Building your first agentic AI app with Docker’s trusted tools and workflows is more approachable than it looks. Once the pieces are defined, a single docker compose up takes you from “idea” to a locally running, tool‑using AI agent.

Below is a practical, step‑by‑step guide that shows how to design, containerize, and run a simple agentic app using Docker’s emerging AI tooling: Docker Model Runner, Docker Compose for agents, and an MCP‑enabled tool layer.

What “agentic” really means

Most developers encounter LLMs first as simple chat or completion APIs, but agentic apps are different. Instead of just generating text, an agent can plan, call tools, read and write data, and iterate toward a goal autonomously.

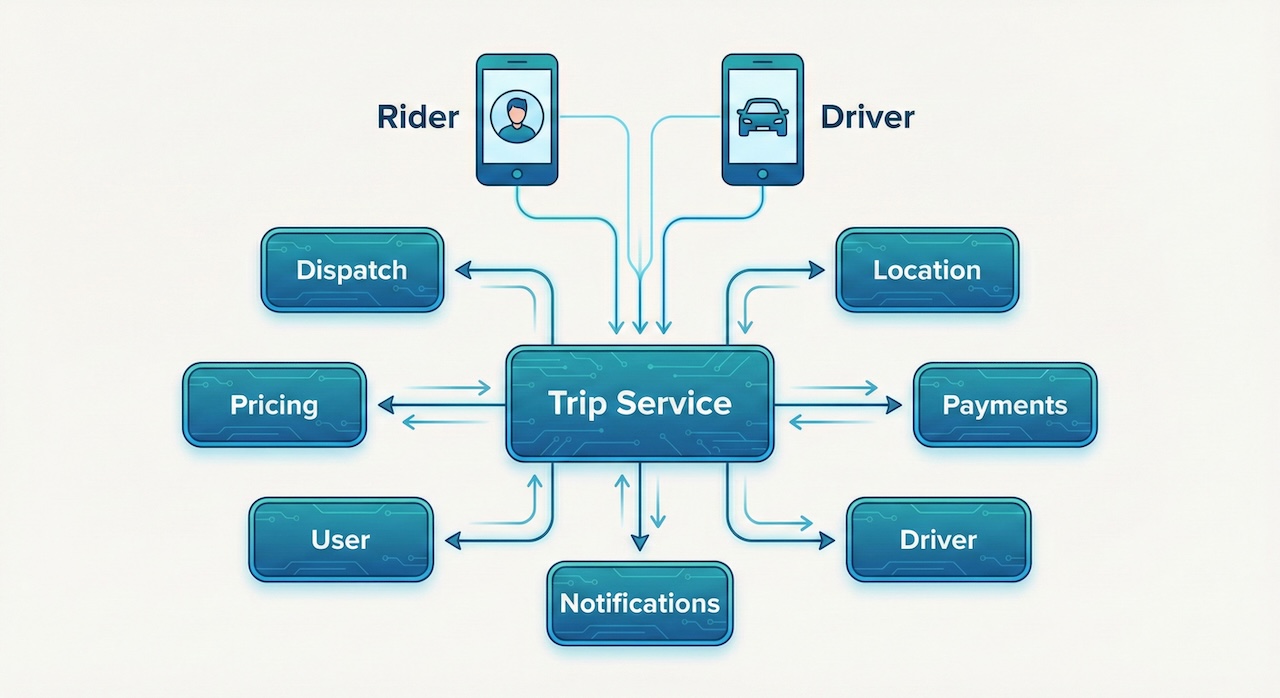

In practice, a basic agentic AI app has three core parts:

- A reasoning engine (LLM) that interprets goals and decides what to do next

- A set of tools (APIs, scripts, services) exposed to the model

- An orchestrator that wires models, tools, and business logic into a coherent workflow
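The sketch below shows how the three roles fit together in a minimal, framework-free loop that an orchestrator might run. It is an illustration under simplifying assumptions, not how Docker or any particular framework implements it. The "model" is a hypothetical scripted function standing in for an LLM, and the tools are in-memory stand-ins whose names (list_files, read_file) match the tools used later in this guide.

```python
from typing import Callable

# A "model" maps the transcript so far to a decision: either
# {"tool": name, "args": {...}} to act, or {"answer": text} to finish.
Model = Callable[[list], dict]


def run_agent(goal: str, model: Model, tools: dict, max_steps: int = 5) -> str:
    """Minimal orchestrator loop: ask the model, run the chosen tool, repeat."""
    transcript = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        decision = model(transcript)  # reasoning engine decides the next step
        if "answer" in decision:
            return decision["answer"]
        observation = tools[decision["tool"]](**decision.get("args", {}))  # tool layer
        transcript.append({"role": "tool", "name": decision["tool"], "content": observation})
    return "stopped: step limit reached"  # guard against endless loops


# Hypothetical scripted "LLM" for illustration: list files, read the first, then answer.
def scripted_model(transcript: list) -> dict:
    observations = [m for m in transcript if m["role"] == "tool"]
    if not observations:
        return {"tool": "list_files", "args": {}}
    if len(observations) == 1:
        first = observations[0]["content"].split(", ")[0]
        return {"tool": "read_file", "args": {"filename": first}}
    return {"answer": f"Summary: {observations[-1]['content']}"}


# In-memory stand-ins for real tools
fake_files = {"plan.md": "ship v1 on Friday", "ideas.md": "add a web UI"}
tools = {
    "list_files": lambda: ", ".join(fake_files),
    "read_file": lambda filename: fake_files[filename],
}

print(run_agent("Summarize my first note", scripted_model, tools))
# Summary: ship v1 on Friday
```

The step limit is the important design choice here. A real LLM can loop indefinitely, so the orchestrator, not the model, decides when to stop.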

Docker’s AI tooling is the glue around these parts rather than a replacement for the orchestrator. It gives you a clean way to package the model, tools, and agent runtime and run them consistently on any machine, local or remote.

Why Docker for agentic AI?

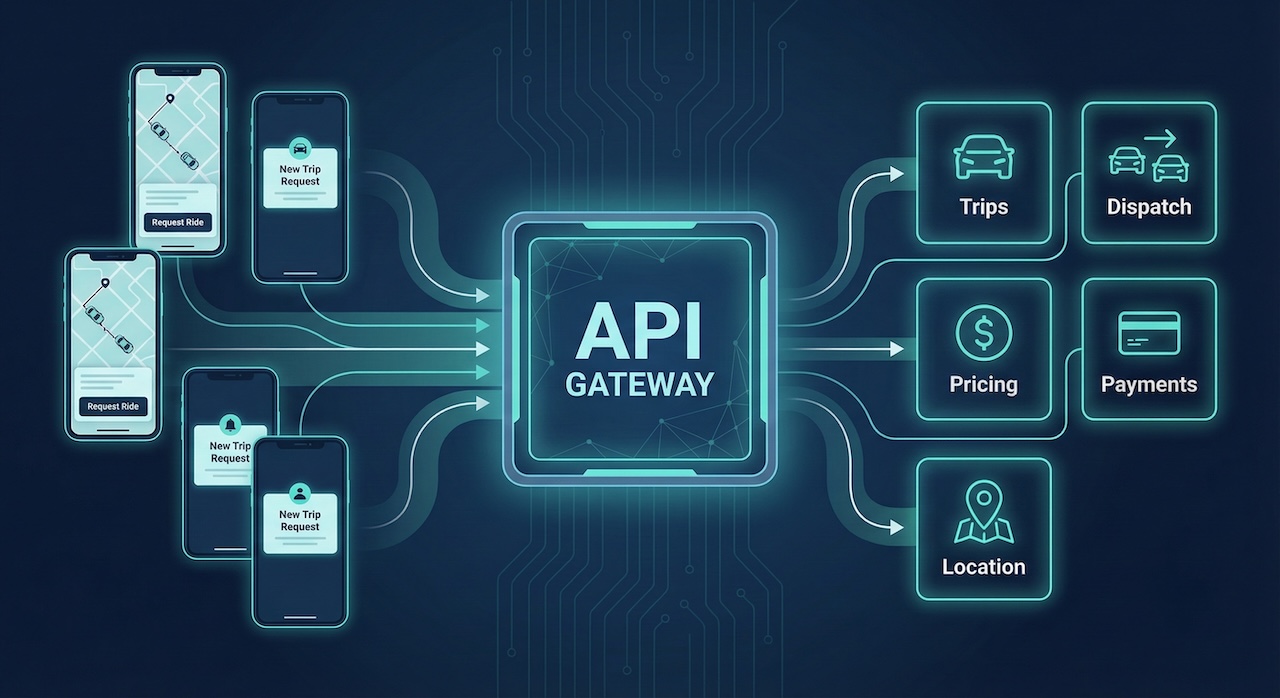

Agentic AI apps are inherently multi‑service: you may have one container for the LLM, one for your agent runtime (LangChain, LangGraph, or a custom loop), one for tools (like an MCP server), plus a frontend or API layer.

Docker simplifies this in three key ways:

- Containerized components: Models, agents, databases, and tool servers all run as isolated containers with reproducible dependencies.

- Compose‑defined workflows: A single compose.yaml can declare models, agents, and MCP tools as first‑class services, so the entire agentic stack comes up with one command.

- Local‑first, cloud‑ready: Docker Model Runner lets you run LLMs locally as OCI images, and Docker Offload can shift heavy workloads to cloud GPUs while you keep the same local workflow.

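When your first “toy” agent grows into something your team depends on, you can move the same container images to Kubernetes, Swarm, or any other container‑native platform. Swarm can deploy a Compose file directly with docker stack deploy. For Kubernetes, the Compose file usually has to be translated into manifests first, for example with Kompose.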

The app you’ll build: a local “research helper” agent

To keep things concrete, consider a small but useful app: a local research helper that can:

- Accept a natural‑language query (e.g., “Summarize the docs in ./docs and propose 3 action items”)

- Use an LLM to plan the steps

- Call tools to read local files and generate structured output

- Return a well‑formatted response via a REST API

At a high level, the architecture looks like this:

- Model service: A local LLM exposed through an OpenAI‑compatible HTTP API using Docker Model Runner (or Ollama‑style containers).

- Agent service: A Python agent built with LangChain or LangGraph, speaking to the model via HTTP and to tools via MCP.

- Tool server (MCP): A small FastAPI app that exposes filesystem utilities (list_files and read_file; summarization is left to the LLM) through a simplified, MCP‑style interface.

- Optional UI: A simple web front‑end (e.g., Streamlit) or just a REST endpoint you can hit with curl/Postman.

Each of these is a container; Docker Compose ties them together on a shared network.

Step 1: Set up your project structure

Create a new folder for your agentic app and a clean, simple layout:

```bash
mkdir docker-agentic-researcher
cd docker-agentic-researcher
mkdir agent mcp_tools docs
touch compose.yaml
```

You will place:

- Agent runtime code in agent/

- MCP tool server in mcp_tools/

- The Markdown notes the agent should read in docs/

- One Dockerfile per service (or reuse base images where possible)

Keeping components loosely coupled and separately containerized makes it easier to swap out models or tools later.

Step 2: Run a local model with Docker Model Runner

Docker’s AI platform includes Docker Model Runner, which wraps popular open‑source models as OCI images and exposes them as HTTP APIs. The idea is similar to “LLM as a container”: pull a model image, run it, and you immediately have an OpenAI‑style endpoint.

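A minimal service in your compose.yaml might look like the following. The image name and environment variables are illustrative placeholders, not a documented interface, so check Docker’s Model Runner documentation for the exact setup. Recent Docker Desktop releases also let you manage models with the docker model CLI (for example, docker model pull) and declare them in a top‑level models section of compose.yaml.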

```yaml
services:
  llm:
    image: docker/genai-model-runner:latest
    # Example; actual image and model names are defined per Docker's GenAI Stack docs
    environment:
      MODEL_NAME: qwen2:7b
      MODEL_SOURCE: dockerhub
      # These envs vary by stack; see Docker GenAI Stack docs
      OPENAI_COMPATIBLE: "true"
    ports:
      - "8080:8080"
```

With this running, your agent can talk to http://llm:8080/v1/chat/completions (for example) using the same client libraries you’d use for OpenAI.
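Because the endpoint is OpenAI‑compatible, any HTTP client can talk to it. The sketch below uses only Python’s standard library. It assumes the base URL http://llm:8080/v1 and the model name qwen2:7b from the example above. The helper names (build_chat_request, extract_reply, chat) are hypothetical. The request and response shapes follow the OpenAI chat completions format.

```python
import json
import urllib.request

# Assumed endpoint from the compose example above; adjust to your setup
BASE_URL = "http://llm:8080/v1"


def build_chat_request(prompt: str, model: str = "qwen2:7b") -> dict:
    """Body for an OpenAI-style POST /v1/chat/completions call."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise research assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, base_url: str = BASE_URL) -> str:
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(build_chat_request(prompt)).encode("utf-8"),
        # A dummy bearer token; local runners typically ignore it
        headers={"Content-Type": "application/json", "Authorization": "Bearer dummy"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_reply(json.load(resp))
```

From another container on the Compose network, chat("Summarize MCP in one sentence") would return the model’s reply. From your host, pass base_url="http://localhost:8080/v1" instead.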

If you prefer an existing reference implementation, Docker’s GenAI Stack and related templates provide ready‑made Compose setups that launch model runners plus supporting components with a single command.

Step 3: Create an MCP‑enabled tool server

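Modern agentic stacks increasingly use the Model Context Protocol (MCP), an open standard that lets models discover and call tools at runtime instead of relying on hard‑coded function schemas. A spec‑compliant MCP server exchanges JSON‑RPC 2.0 messages over stdio or HTTP and answers requests such as tools/list and tools/call. To keep this tutorial small, the server below is a simplified, MCP‑style REST service. It publishes a tool manifest and handles tool invocations, but it is not a drop‑in MCP server. For anything beyond experimentation, an official MCP SDK can handle the protocol details.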
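For comparison, here is roughly what the wire messages of a spec‑compliant MCP exchange look like. This minimal sketch only builds and parses JSON‑RPC 2.0 messages for the tools/list and tools/call methods. It omits the transport (stdio or HTTP) and the initialize handshake that a real session begins with. The helper function names are hypothetical.

```python
import itertools
from typing import Optional

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object as used by MCP."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def list_tools_request() -> dict:
    return jsonrpc_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def text_from_tool_result(response: dict) -> str:
    """Return the joined text content of a tools/call response."""
    if "error" in response:  # protocol-level JSON-RPC error
        raise RuntimeError(response["error"].get("message", "JSON-RPC error"))
    result = response["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text
```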

Inside mcp_tools/, add:

```bash
cd mcp_tools
touch server.py requirements.txt Dockerfile
```

requirements.txt:

```text
fastapi
uvicorn[standard]
pydantic
```

server.py (high‑level sketch):

```python
from pathlib import Path

from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()

# Directory holding the documents the agent may read (mounted via Compose)
BASE_DIR = Path("/data").resolve()


class ToolCall(BaseModel):
    tool: str
    args: dict = {}


@app.get("/.well-known/mcp.json")
def mcp_manifest():
    # Minimal MCP-style manifest so the agent framework can discover tools.
    # (A spec-compliant MCP server answers a JSON-RPC "tools/list" request instead.)
    return {
        "name": "filesystem_tools",
        "version": "0.1.0",
        "tools": [
            {"name": "list_files", "description": "List markdown files in the workspace"},
            {"name": "read_file", "description": "Read a file's contents"},
        ],
    }


@app.post("/call")
def call_tool(call: ToolCall):
    if call.tool == "list_files":
        files = sorted(p.name for p in BASE_DIR.glob("*.md"))
        return {"result": files}

    if call.tool == "read_file":
        filename = str(call.args.get("filename", "")).strip()
        path = (BASE_DIR / filename).resolve()
        # Reject paths that escape /data (e.g. "../etc/passwd") and anything that isn't a file
        if not path.is_relative_to(BASE_DIR) or not path.is_file():
            return {"error": "file not found"}
        return {"result": path.read_text(encoding="utf-8")}

    return {"error": "unknown tool"}
```

A simple Dockerfile:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY server.py .
EXPOSE 9000
CMD ["uvicorn", "server:app", "--host", "0.0.0.0", "--port", "9000"]
```

This service gives your agent actions it can take in the real world (or at least on your local filesystem), all described and discovered through its MCP‑style manifest.
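In the server above, the manifest and the if/elif dispatch are maintained separately, so adding a tool means editing two places that can drift apart. One alternative is a small registry that derives both from a single source of truth, similar in spirit to the decorator style used by MCP SDKs. The helper names below (tool, manifest, dispatch) are hypothetical. A temporary directory stands in for /data so the sketch runs anywhere. It also rejects filenames that resolve outside the base directory.

```python
import tempfile
from pathlib import Path
from typing import Callable

BASE_DIR = Path(tempfile.mkdtemp())  # stands in for Path("/data") in this sketch
TOOLS: dict = {}  # tool name -> (description, function)


def tool(description: str) -> Callable:
    """Register a function as a tool under its own name."""
    def register(fn: Callable) -> Callable:
        TOOLS[fn.__name__] = (description, fn)
        return fn
    return register


def manifest() -> dict:
    return {"tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]}


def dispatch(name: str, args: dict) -> dict:
    if name not in TOOLS:
        return {"error": "unknown tool"}
    try:
        return {"result": TOOLS[name][1](**args)}
    except (TypeError, FileNotFoundError) as exc:  # bad arguments or missing file
        return {"error": str(exc)}


@tool("List markdown files in the workspace")
def list_files() -> list:
    return sorted(p.name for p in BASE_DIR.glob("*.md"))


@tool("Read a file's contents")
def read_file(filename: str) -> str:
    path = (BASE_DIR / filename).resolve()
    if not path.is_relative_to(BASE_DIR.resolve()) or not path.is_file():
        raise FileNotFoundError(f"file not found: {filename}")
    return path.read_text(encoding="utf-8")
```

With this layout, the /.well-known/mcp.json handler would return manifest(), the /call handler would return dispatch(call.tool, call.args), and a new tool needs only one decorated function.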

Step 4: Implement the agent runtime (Python + LangChain or LangGraph)

Next, build the agent service that:

- Accepts HTTP requests (e.g., POST /query)

- Calls the LLM with a system prompt that explains the tools

- Uses an agent framework to decide when to call MCP tools

- Returns a final answer
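The zero‑shot‑react‑description agent type used below follows the ReAct pattern. The model is prompted to reply in a Thought / Action / Action Input / Final Answer text format, and the framework parses that text to decide whether to call a tool or stop. The sketch below is a simplified, hypothetical parser for that format, meant to show the mechanism. It is not LangChain’s actual implementation.

```python
import re
from typing import NamedTuple, Optional


class Step(NamedTuple):
    action: Optional[str]        # tool to call, if any
    action_input: Optional[str]  # raw string argument for the tool
    final_answer: Optional[str]  # set when the model is done


_ACTION = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.*)", re.DOTALL)
_FINAL = re.compile(r"Final Answer:\s*(.*)", re.DOTALL)


def parse_react(text: str) -> Step:
    """Turn one ReAct-style completion into either a tool call or a final answer."""
    final = _FINAL.search(text)
    if final:
        return Step(None, None, final.group(1).strip())
    action = _ACTION.search(text)
    if action:
        # Models often quote the argument, e.g. Action Input: "notes.md"
        return Step(action.group(1).strip(), action.group(2).strip().strip("\"'"), None)
    raise ValueError(f"could not parse model output: {text!r}")
```

Unparseable output is common with small local models. This is why a ReAct loop typically feeds the parsing error back to the model instead of crashing.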

In agent/:

```bash
cd ../agent
touch agent.py requirements.txt Dockerfile
```

requirements.txt:

```text
fastapi
uvicorn[standard]
# initialize_agent below is a legacy API; pin pre-1.0 LangChain releases
langchain<1.0
langchain-openai<1.0
httpx
```

A minimal agent.py (simplified agent loop):

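```python
import os

import httpx
from fastapi import FastAPI
from pydantic import BaseModel
from langchain.agents import AgentType, Tool, initialize_agent
from langchain_openai import ChatOpenAI

# Service names "mcp" and "llm" resolve through Compose's internal DNS
MCP_URL = os.environ.get("MCP_URL", "http://mcp:9000")
LLM_BASE_URL = os.environ.get("OPENAI_BASE_URL", "http://llm:8080/v1")

app = FastAPI()


class Query(BaseModel):
    query: str


async def call_mcp(tool: str, args: dict) -> dict:
    """POST a tool invocation to the MCP-style tool server."""
    async with httpx.AsyncClient(timeout=30) as client:
        resp = await client.post(f"{MCP_URL}/call", json={"tool": tool, "args": args})
        resp.raise_for_status()
        return resp.json()


async def list_files_tool(_input: str = "") -> str:
    result = await call_mcp("list_files", {})
    return str(result.get("result", result.get("error", [])))


async def read_file_tool(filename: str) -> str:
    # ReAct-style models often wrap the argument in quotes; strip them
    result = await call_mcp("read_file", {"filename": filename.strip().strip("\"'")})
    return result.get("result", result.get("error", ""))


# Async tools are registered via `coroutine=`. Passing them as `func` would hand
# the agent an un-awaited coroutine object instead of the tool's string output.
tools = [
    Tool(
        name="list_files",
        func=None,
        coroutine=list_files_tool,
        description="List available markdown files in the workspace",
    ),
    Tool(
        name="read_file",
        func=None,
        coroutine=read_file_tool,
        description="Read a markdown file given its name",
    ),
]

# LLM using Docker Model Runner as an OpenAI-compatible backend
llm = ChatOpenAI(
    base_url=LLM_BASE_URL,
    api_key=os.environ.get("OPENAI_API_KEY", "dummy"),  # model runner typically ignores this
    model="qwen2:7b",  # match the model in the llm service
)

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    handle_parsing_errors=True,  # feed malformed model output back instead of crashing
    verbose=True,
)


@app.post("/query")
async def handle_query(payload: Query):
    # You can improve this by injecting instructions about using tools.
    # ainvoke returns a dict; the final answer is under "output".
    result = await agent.ainvoke({"input": payload.query})
    return {"answer": result["output"]}
```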

This is intentionally minimal, but it demonstrates the agentic pattern: LLM + tools + orchestration.

A basic Dockerfile:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY agent.py .
EXPOSE 8000
CMD ["uvicorn", "agent:app", "--host", "0.0.0.0", "--port", "8000"]
```

Step 5: Wire everything with Docker Compose

Now connect the three services — model, MCP tools, agent — in compose.yaml.

```yaml
services:
  llm:
    image: docker/genai-model-runner:latest  # placeholder; see Step 2
    environment:
      MODEL_NAME: qwen2:7b
      MODEL_SOURCE: dockerhub
      OPENAI_COMPATIBLE: "true"
    ports:
      - "8080:8080"

  mcp:
    build: ./mcp_tools
    volumes:
      - ./docs:/data:ro  # local docs the agent can read (read-only)
    ports:
      - "9000:9000"

  agent:
    build: ./agent
    environment:
      # Point LangChain's OpenAI client to the model runner
      OPENAI_API_KEY: "dummy"
      OPENAI_BASE_URL: "http://llm:8080/v1"
    depends_on:
      - llm
      - mcp
    ports:
      - "8000:8000"
```
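Note that depends_on only controls start order. It does not wait for the model to finish loading, so the agent’s first requests can fail while the LLM is still warming up. A minimal sketch of a readiness check the agent could run at startup is below. The function name and the URLs in the usage note are assumptions. The /v1/models path is part of the OpenAI API, but check that your runner actually serves it.

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(url: str, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll url until it answers with any HTTP response, or give up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # the server is up, even if this path returns 4xx/5xx
        except (urllib.error.URLError, ConnectionError, TimeoutError):
            time.sleep(interval)  # not listening yet; retry
    return False
```

For example, the agent could call wait_until_ready("http://llm:8080/v1/models") and wait_until_ready("http://mcp:9000/.well-known/mcp.json") before serving requests. Compose healthchecks combined with depends_on conditions are the declarative alternative.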

From the project root, launch the full stack:

```bash
docker compose up --build
```

Compose will build the MCP and agent images, start the model runner, and connect everything on a shared network. When the stack is up, test the agent:

```bash
curl -X POST http://localhost:8000/query \
  -H "Content-Type: application/json" \
  -d '{"query": "List the markdown files in my workspace and summarize the first one."}'
```

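In the agent’s logs (docker compose logs agent), the verbose output should show the agent calling list_files, then read_file, before it returns a synthesized summary. Small local models do not always follow the ReAct format reliably. If the agent stalls, try tightening the prompt or switching to a larger model.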
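To script this check instead of using curl, a standard‑library client is enough. The sketch below assumes the /query endpoint and the {"answer": ...} response shape defined in agent.py. The function name ask_agent is hypothetical, and the generous timeout allows for slow local models.

```python
import json
import urllib.request


def ask_agent(query: str, url: str = "http://localhost:8000/query", timeout: float = 300.0) -> str:
    """POST a query to the agent service and return its "answer" field."""
    body = json.dumps({"query": query}).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["answer"]
```

A call such as ask_agent("List the markdown files in my workspace and summarize the first one.") should return the same answer the curl command prints.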

Step 6: Add security, observability, and trusted workflows

Running an agent locally is only step one. Docker’s focus on “trusted tools and workflows” is about making sure the same setup is safe and reproducible in team or production environments.

Some practical upgrades:

- Secrets management: Use Docker secrets or environment variables for API keys and database credentials instead of committing them to Git.

- Resource limits: Configure CPU and memory limits per service in Compose to prevent runaway agents or models from starving other workloads.

- Logging and metrics: Aggregate logs from the agent, model, and tools, and add tracing so you can debug tool calls and LLM decisions.
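A lightweight first step toward tracing tool calls is to log every invocation with its arguments, duration, and outcome. The decorator below is a minimal sketch for plain synchronous tool functions, such as those on the tool server. The names traced and read_file are illustrative. Because containers write logs to stdout/stderr, docker compose logs will collect these lines without extra setup.

```python
import functools
import logging
import time

logger = logging.getLogger("agent.tools")


def traced(fn):
    """Log each tool call's name, arguments, duration, and outcome."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            logger.exception("tool=%s args=%r kwargs=%r failed", fn.__name__, args, kwargs)
            raise  # keep the original error for the caller
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("tool=%s args=%r kwargs=%r ok in %.1f ms", fn.__name__, args, kwargs, elapsed_ms)
        return result
    return wrapper


@traced
def read_file(filename: str) -> str:  # hypothetical tool for illustration
    return f"contents of {filename}"
```

Call logging.basicConfig(level=logging.INFO) at startup so the INFO lines are actually emitted.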

Docker’s official AI resources and GenAI Stack templates show patterns for exposing structured health endpoints, log aggregation, and GPU configuration that you can adapt to your app.

Step 7: Scale out and go multi‑agent

Once you have a single agent working, Docker’s workflows make it straightforward to scale horizontally or expand into multi‑agent systems.

A few directions to explore:

- Multi‑agent Compose: Define multiple agent services in your Compose file, each with a different role (researcher, summarizer, critic), and coordinate them through a message bus or orchestrator.

- GPU offload: Use Docker Offload or GPU‑enabled model runner images to accelerate larger models without changing your agent code.

- Production orchestration: Deploy the same containers to Kubernetes or Swarm, using Horizontal Pod Autoscalers or Swarm scaling to handle variable traffic.
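Before introducing a message bus, the coordination logic for multiple agents can start very small. In the sketch below, each agent is modeled as a plain function from input text to output text. In a real stack, each would be its own Compose service reached over HTTP. The function names and the "empty feedback means approve" convention are assumptions for illustration. The sketch shows two common patterns: a sequential researcher-to-summarizer pipeline and a critic loop with a round limit.

```python
from typing import Callable

Agent = Callable[[str], str]  # stands in for an HTTP call to an agent service


def pipeline(task: str, roles: list) -> list:
    """Run (role, agent) pairs in order, feeding each agent the previous output."""
    trail, current = [], task
    for role, agent in roles:
        current = agent(current)
        trail.append((role, current))
    return trail


def run_with_critic(task: str, worker: Agent, critic: Agent, max_rounds: int = 3) -> str:
    """Worker drafts; critic returns "" to approve or feedback to trigger a revision."""
    draft = worker(task)
    for _ in range(max_rounds):
        feedback = critic(draft)
        if not feedback:
            return draft
        draft = worker(f"{task}\nRevise using feedback: {feedback}")
    return draft  # best effort once the round limit is reached
```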

Recent Docker blogs and webinars on agentic AI highlight these patterns and provide sample Compose files you can clone and adapt.

Where to go next

To deepen this starter app and align more closely with Docker’s official patterns, useful next steps include:

- Studying Docker’s “GenAI vs. Agentic AI” and “Docker for AI” resources to understand how Model Runner, Compose extensions, and Offload are meant to fit together in a production‑grade stack.

- Exploring more complete examples such as local agents with Ollama, LangChain, and MCP that already use Docker Compose to glue agents, tools, and frontends together.

- Watching Docker’s “Build Your First Agentic App with Docker” webinar for an end‑to‑end demo and architecture walkthrough that looks very similar to what you built here.

By combining a local model runner, an MCP tool server, and an agent runtime into a single Docker Compose stack, you have a solid, reproducible foundation for your first agentic AI application — and a clear path from laptop experimentation to trusted, team‑ready workflows.