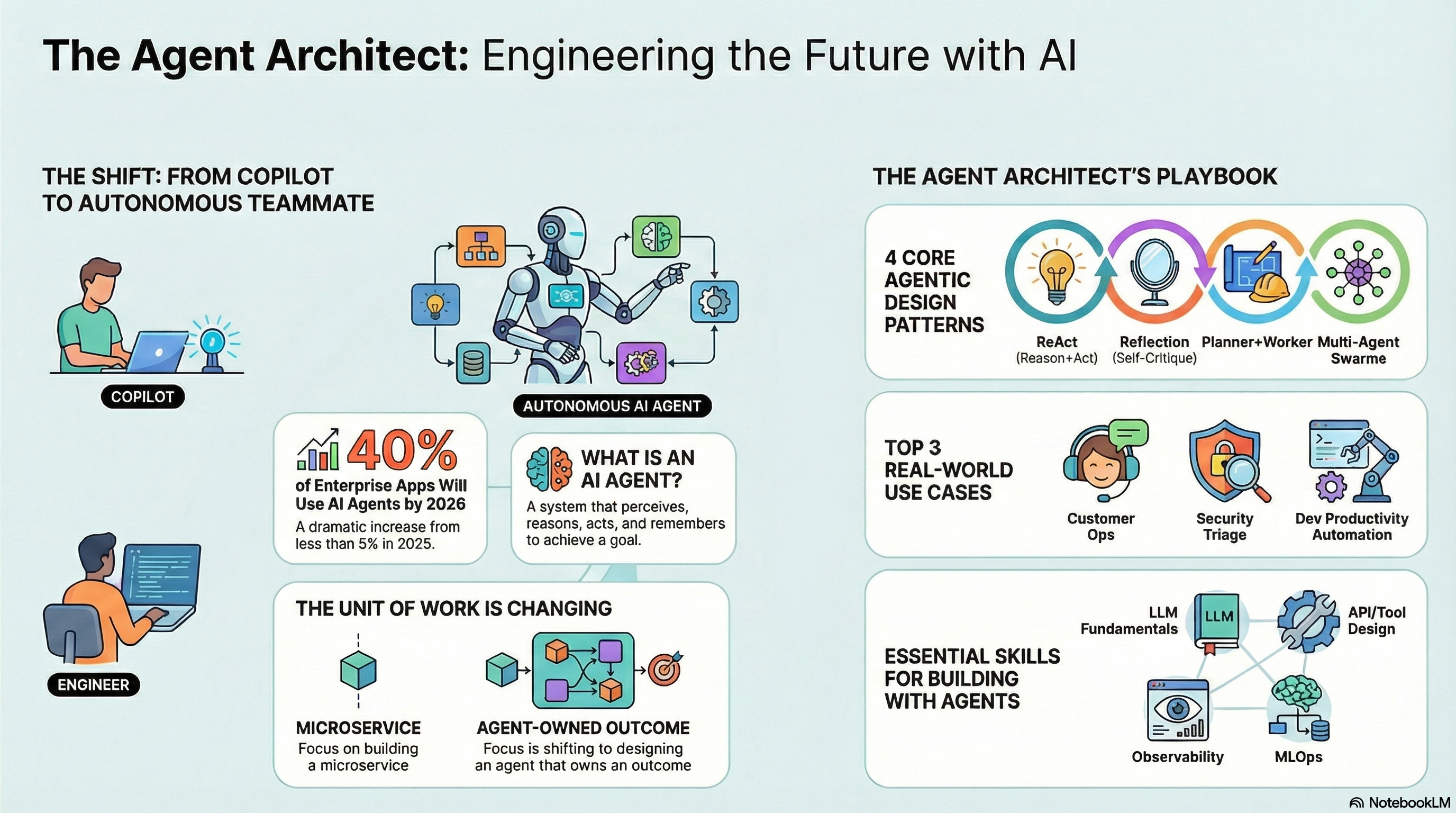

Anthropic’s Claude Skills let you turn messy, one-off agents into reusable “skills” that encode your workflows as code, docs, and checklists. In this guide, you’ll learn what Claude Skills are, why Anthropic’s talk on the topic argues “don’t build agents, build skills,” and how to build your own Skills with concrete examples and code snippets.

Why Skills Beat Monolithic Agents

Traditional “agents” promise an AI that can do everything: browse, call tools, write code, send emails, and more. In reality, most production teams end up with many custom agents—one per domain, each with its own prompt stack, tool wiring, and bespoke hacks.

This approach has several problems:

- Hard to scale: Every new use case means another custom agent to design, test, and maintain.

- Shallow expertise: Even a great model struggles with complex domains (tax, medicine, trading) without structured institutional knowledge.

- Poor reuse: If you teach one agent how your company does “Quarterly Financial Reporting,” that knowledge rarely transfers to other agents.

Anthropic’s answer is Agent Skills: instead of building more agents, build reusable expertise packages that any general agent can load on demand.

What Is an Agent Skill?

A Skill is just an organized folder that contains:

- A metadata/instructions file named SKILL.md, which opens with YAML frontmatter giving the Skill’s name and a short description.

- Optional reference docs, checklists, JSON/YAML configs.

- Optional executable scripts (Python, Node, Bash, etc.).

This folder describes how to do a specific kind of work: a workflow, not just static facts. As Anthropic puts it, building a Skill is like writing an onboarding guide for a new hire—but one that a model can read, execute, and update over time.

A minimal Skill might look like this:

```text
financial-reporting-skill/
  SKILL.md
  templates/
    q1-template.docx
  scripts/
    generate_report.py
```

The core design idea is progressive disclosure. At runtime, the agent only sees lightweight metadata about available Skills; it reads the full instructions and supporting files only when a task actually requires that Skill. This keeps the context window lean while still letting you attach lots of rich knowledge and code.
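To make progressive disclosure concrete, here is a minimal sketch of what a runtime might do, assuming each Skill folder contains a SKILL.md with simple `key: value` frontmatter. The function names (`parse_skill_file`, `build_skill_index`, `load_skill_body`) are hypothetical, and the frontmatter parser is deliberately naive; a real runtime would use a YAML library.

```python
from pathlib import Path


def parse_skill_file(path: Path) -> tuple[dict[str, str], str]:
    """Split a SKILL.md into (frontmatter fields, markdown body).

    Naive parser: handles only flat `key: value` lines between `---` fences.
    """
    text = path.read_text(encoding="utf-8")
    meta: dict[str, str] = {}
    if not text.startswith("---"):
        return meta, text
    parts = text.split("---", 2)  # ["", frontmatter, body]
    if len(parts) < 3:
        return meta, text  # unterminated frontmatter: treat it all as body
    _, header, body = parts
    for line in header.strip().splitlines():
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta, body.lstrip("\n")


def build_skill_index(skills_root: Path) -> list[dict[str, str]]:
    """Level 1: collect only name + description for every Skill folder."""
    index = []
    for skill_md in sorted(skills_root.glob("*/SKILL.md")):
        meta, _body = parse_skill_file(skill_md)
        index.append({
            "name": meta.get("name", skill_md.parent.name),
            "description": meta.get("description", ""),
            "path": str(skill_md.parent),
        })
    return index


def render_index_for_prompt(index: list[dict[str, str]]) -> str:
    """The compact text a runtime might place in the system prompt."""
    return "\n".join(f"- {s['name']}: {s['description']}" for s in index)


def load_skill_body(index: list[dict[str, str]], name: str) -> str:
    """Level 2: read the full instructions only once a task needs the Skill."""
    for entry in index:
        if entry["name"] == name:
            _meta, body = parse_skill_file(Path(entry["path"]) / "SKILL.md")
            return body
    raise KeyError(f"unknown skill: {name}")
```

Only the one-line summaries cost context up front; the body, and any files it points to, are read later and only for the Skill the task actually needs.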

Skills vs MCP vs Projects vs Custom Agents

Skills are part of a broader ecosystem around Claude and other modern agents.

- MCP (Model Context Protocol) gives the agent connectivity—APIs, databases, SaaS tools, etc.

- Skills give the agent procedural expertise—how to use those tools and how to execute domain workflows step by step.

- Projects / background context provide static knowledge (docs, PDFs, specs) associated with a workspace or chat.

One useful way to think about it:

| Layer | What it provides | Example |
|---|---|---|
| Model | General reasoning & language | “Write a Python function” |
| Agent runtime | Loop + filesystem + code execution | Claude Code environment with a sandboxed FS |
| MCP servers | External connectivity (APIs, DBs, tools) | JIRA server, browser automation, internal APIs |
| Skills | Procedural expertise & workflows | “How we do QBRs” or “How to test our webapp” |
| Custom agents | Prompt/UX wrappers around the above | “Support assistant”, “Dev helper”, etc. |

Instead of shipping a different agent for each use case, you ship:

- One generalized agent runtime.

- A curated set of MCP servers.

- A library of Skills that encode your workflows and best practices.

Skill Anatomy: A Concrete Example

Anthropic’s engineering blog walks through a PDF form-filling Skill backing Claude’s document-editing abilities. Here’s a simplified illustration of how a real Skill might be structured.

Folder structure

```text
pdf-forms-skill/
  SKILL.md
  forms.md
  scripts/
    extract_fields.py
    fill_pdf.py
  examples/
    w9_blank.pdf
    w9_filled_example.pdf
```

SKILL.md – Metadata and core instructions

```markdown
---
name: pdf-forms-skill
description: Read, fill, and validate PDF forms. Use when the user asks to fill out a PDF form or map form fields to their data.
---

# PDF Form Filling Skill

## Summary

This skill teaches the agent how to read, understand, and fill PDF forms consistently.

## When to use

Use this skill when the user asks you to:

- Fill out a PDF form.
- Extract form fields from a PDF and map them to user data.
- Validate that all required fields have been populated.

## High-level workflow

1. Inspect the PDF and identify all form fields.
2. Map each field to structured data from the user.
3. Validate required fields and basic formatting.
4. Fill the PDF and export a final version.

For detailed field handling, see `forms.md`.

To work with PDFs programmatically, see scripts in `scripts/`.
```

At runtime, the agent first sees only this Skill’s name and short description, and uses them to decide whether to load the Skill for a given task. If the Skill is relevant, the agent reads the full instructions, follows the pointer to forms.md for the detailed rules, and runs the helper scripts when needed.

forms.md – Detailed procedural knowledge

```markdown
# Detailed PDF Form Handling

## Field detection

- Prefer programmatic field extraction over manual inspection.
- Always create a mapping table: PDF field name → semantic meaning → example value.

## Validation rules

- For tax IDs: ensure they contain only digits and hyphens, length 9–11.
- For dates: use ISO format `YYYY-MM-DD` unless the form specifies otherwise.

## Error handling

- If a required field is missing, list the missing fields and ask the user to provide values.
- Never guess legally significant values.
```

This is where you encode your domain rules, edge cases, and safety constraints in plain language that the model can follow.
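Rules like these are also easy to back with a deterministic check. The sketch below is a hypothetical `scripts/validate_fields.py`, not part of the original Skill, that applies the two validation rules and the missing-field rule from forms.md. The `schema` structure (`required`, `kind`) is an assumption for illustration.

```python
import re
from datetime import date

# Mirrors the forms.md rule literally: digits and hyphens only, 9-11 characters
# (covers 123456789, 12-3456789, 123-45-6789). A stricter check might count digits.
TAX_ID_RE = re.compile(r"[0-9-]{9,11}")


def valid_tax_id(value: str) -> bool:
    return TAX_ID_RE.fullmatch(value) is not None


def valid_iso_date(value: str) -> bool:
    """True only for a real calendar date written as YYYY-MM-DD."""
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return False
    try:
        date.fromisoformat(value)  # rejects impossible dates such as 2023-02-29
    except ValueError:
        return False
    return True


def check_fields(values: dict[str, str], schema: dict[str, dict]) -> dict[str, list[str]]:
    """Return missing required fields and fields that fail their format rule.

    `schema` maps field name -> {"required": bool, "kind": "tax_id" | "date" | "text"}.
    """
    validators = {"tax_id": valid_tax_id, "date": valid_iso_date}
    missing, invalid = [], []
    for name, spec in schema.items():
        value = values.get(name, "").strip()
        if not value:
            if spec.get("required"):
                missing.append(name)
            continue
        check = validators.get(spec.get("kind", "text"))
        if check is not None and not check(value):
            invalid.append(name)
    return {"missing": missing, "invalid": invalid}
```

An empty `missing` list is the signal to proceed; otherwise the agent follows the error-handling rule and asks the user for those values instead of guessing.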

Adding Code: Scripts Inside Skills

Skills become much more powerful when you include executable scripts that the agent can call for deterministic operations or heavy lifting. The Skill author can write small utilities in Python, Node.js, Bash, etc., and the agent chooses when to run them.

Example: Python script for parsing and filling PDFs

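```python
# scripts/fill_pdf.py
# Uses pypdf (pip install pypdf) in place of the placeholder `some_pdf_lib`.
import json
import sys

from pypdf import PdfReader, PdfWriter


def fill_pdf(template_path, output_path, field_values):
    """Fill known form fields from field_values; return the names that were skipped."""
    reader = PdfReader(template_path)
    known = reader.get_fields() or {}
    # Only fill fields that exist in the form, like the original name check.
    to_fill = {name: value for name, value in field_values.items() if name in known}
    skipped = sorted(set(field_values) - set(to_fill))

    writer = PdfWriter()
    writer.append(reader)
    if to_fill:
        for page in writer.pages:
            if "/Annots" in page:  # only pages that carry form widgets
                writer.update_page_form_field_values(page, to_fill, auto_regenerate=False)
    with open(output_path, "wb") as fh:
        writer.write(fh)
    return skipped


if __name__ == "__main__":
    # Read JSON from stdin: {"template": "...", "output": "...", "fields": {...}}
    payload = json.loads(sys.stdin.read())
    skipped = fill_pdf(payload["template"], payload["output"], payload["fields"])
    print(json.dumps({"status": "ok", "output": payload["output"], "skipped": skipped}))
```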

In the Claude Code environment, the agent can decide to run this script rather than re-implement PDF parsing in-token every time. This improves reliability and keeps complex logic in regular code where you can test it with normal tools.
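Because the script reads one JSON object on stdin and prints one JSON object on stdout, the calling side can stay generic. Here is a minimal sketch of how a runtime, or a test, might invoke any such Skill script. `run_skill_script` is a hypothetical helper, not a Claude API, and it assumes the script is a Python file run with the current interpreter.

```python
import json
import subprocess
import sys
from pathlib import Path


def run_skill_script(script: Path, payload: dict, timeout: float = 60.0) -> dict:
    """Run a Skill script with `payload` as JSON on stdin; return its parsed JSON stdout."""
    proc = subprocess.run(
        [sys.executable, str(script)],
        input=json.dumps(payload),
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    if proc.returncode != 0:
        # Surface stderr so the agent can read the error and adjust its next step.
        raise RuntimeError(f"{script.name} failed: {proc.stderr.strip()}")
    return json.loads(proc.stdout)
```

Keeping the contract to plain JSON means one helper works for every script in every Skill, and each script can be tested without an agent in the loop.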

Example Skill: Data Analysis + Slide Generation

To show how Skills compose, consider a realistic workflow: “Analyze this CSV dataset and create a slide deck with charts that follow our brand guidelines.” Community discussions highlight similar multi-Skill workflows: a data analysis Skill, a PowerPoint Skill, and a brand-guidelines Skill working together.

You might define three Skills:

- data-analysis-skill

- slide-generation-skill

- brand-guidelines-skill

1. Data Analysis Skill

Folder:

```text
data-analysis-skill/
  SKILL.md
  scripts/
    summarize_csv.py
    generate_charts.py
```

SKILL.md:

```markdown
---
name: data-analysis-skill
description: Analyze tabular datasets (CSV, Excel) and propose chart specifications. Use for trend, comparison, or anomaly questions about a dataset.
---

# Data Analysis Skill

## Summary

This skill helps analyze tabular datasets (CSV, Excel) and produce clean summaries and chart specifications.

## Workflow

1. Inspect the dataset: column names, types, missing values.
2. Identify the user's goal (e.g., trend analysis, comparison, anomaly detection).
3. Use `summarize_csv.py` to compute statistics if needed.
4. Propose 2–4 charts with clear titles and axes.
5. Output: a structured JSON spec for visualizations and a narrative summary.
```

generate_charts.py (simplified):

```python
# scripts/generate_charts.py
import json
import sys

import pandas as pd


def suggest_charts(path):
    df = pd.read_csv(path)
    charts = []
    # Very naive example: if there's a date column and a numeric column, suggest a line chart.
    date_cols = [c for c in df.columns if "date" in c.lower()]
    num_cols = df.select_dtypes(include=["number"]).columns.tolist()
    if date_cols and num_cols:
        charts.append({
            "type": "line",
            "x": date_cols[0],
            "y": num_cols[0],
            "title": f"{num_cols[0]} over time",
        })
    return charts


if __name__ == "__main__":
    payload = json.loads(sys.stdin.read())
    path = payload["path"]
    charts = suggest_charts(path)
    print(json.dumps({"charts": charts}))
```

The agent can call this script, then use the resulting chart specs as inputs for whatever slide generation mechanism exists in another Skill.
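The workflow also calls `summarize_csv.py`, which isn't shown. A minimal sketch of what it might do follows: step 1 of the workflow (columns, types, missing values) plus basic statistics. It uses only the standard library’s `csv` and `statistics` modules, and its type inference (a column is numeric if every non-empty cell parses as a float) is a simplifying assumption.

```python
# scripts/summarize_csv.py (hypothetical sketch)
import csv
import json
import statistics


def _as_float(value: str):
    """Parse a cell as a number, or return None if it isn't one."""
    try:
        return float(value)
    except ValueError:
        return None


def summarize(fh) -> dict:
    """Row count plus, per column: inferred type, missing count, numeric stats."""
    reader = csv.DictReader(fh)
    rows = list(reader)
    summary = {"row_count": len(rows), "columns": {}}
    for col in reader.fieldnames or []:
        values = [(row.get(col) or "").strip() for row in rows]
        present = [v for v in values if v]
        numbers = [_as_float(v) for v in present]
        info = {"missing": len(values) - len(present)}
        # Simplification: numeric only if every non-empty cell parses as a float.
        if present and all(n is not None for n in numbers):
            info.update(type="numeric", min=min(numbers), max=max(numbers),
                        mean=statistics.fmean(numbers))
        else:
            info["type"] = "text"
        summary["columns"][col] = info
    return summary


def main(raw: str) -> str:
    """JSON in ({"path": "data.csv"}), JSON out, like generate_charts.py."""
    payload = json.loads(raw)
    with open(payload["path"], newline="", encoding="utf-8") as fh:
        return json.dumps(summarize(fh))
```

As a script, it would end with `print(main(sys.stdin.read()))` under an `if __name__ == "__main__":` guard, matching the stdin/stdout convention of the other scripts.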

2. Slide Generation Skill

This Skill focuses solely on turning structured content into slides.

```text
slide-generation-skill/
  SKILL.md
  templates/
    default_layout.pptx
  scripts/
    build_deck.py
```

SKILL.md:

````markdown
---
name: slide-generation-skill
description: Convert structured content (headlines, bullets, chart specs) into a slide deck. Use when the user wants a presentation built from prepared content.
---

# Slide Generation Skill

## Summary

This skill converts structured content (headlines, bullets, chart specs) into a slide deck.

## Input format

Expect a JSON object:

```json
{
  "title": "Deck title",
  "sections": [
    {
      "title": "Section title",
      "bullets": ["Point 1", "Point 2"],
      "charts": [ ... ]
    }
  ]
}
```

## Workflow

1. Validate the JSON structure.
2. For each section, create one slide.
3. For each chart spec, reserve space on the slide and ensure axes/titles are legible.
4. Save as `output.pptx` and return the path.
````

The actual chart rendering might be done via Python libraries like python-pptx and matplotlib in build_deck.py, which the agent can invoke. You keep that code in the Skill, not in the agent prompt.
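Here is a rough sketch of build_deck.py under the input format above. Validation uses only the standard library; rendering uses python-pptx (`Presentation`, `slide_layouts`, placeholder text frames) and is imported lazily so the validator runs without it. Chart drawing is stubbed out as a text line, and `validate_deck`, `render`, and `main` are hypothetical names.

```python
# scripts/build_deck.py (hypothetical sketch)
import json


def validate_deck(spec: dict) -> list[str]:
    """Workflow step 1: list structural problems; an empty list means valid."""
    errors = []
    if not isinstance(spec.get("title"), str):
        errors.append("title must be a string")
    sections = spec.get("sections")
    if not isinstance(sections, list) or not sections:
        errors.append("sections must be a non-empty list")
        return errors
    for i, section in enumerate(sections):
        if not isinstance(section, dict):
            errors.append(f"sections[{i}] must be an object")
            continue
        if not isinstance(section.get("title"), str):
            errors.append(f"sections[{i}].title must be a string")
        if not isinstance(section.get("bullets", []), list):
            errors.append(f"sections[{i}].bullets must be a list")
    return errors


def render(spec: dict, output_path: str = "output.pptx") -> str:
    """Workflow steps 2-4: one title-and-content slide per section, then save."""
    from pptx import Presentation  # third-party: pip install python-pptx

    # Default template; a real Skill would load templates/default_layout.pptx instead.
    prs = Presentation()
    for section in spec["sections"]:
        slide = prs.slides.add_slide(prs.slide_layouts[1])  # "Title and Content"
        slide.shapes.title.text = section["title"]
        body = slide.placeholders[1].text_frame
        lines = list(section.get("bullets", []))
        # Stand-in for real chart rendering (e.g. a matplotlib image on the slide).
        lines += [f"[chart] {c.get('title', 'untitled')}" for c in section.get("charts", [])]
        body.text = lines[0] if lines else ""
        for line in lines[1:]:
            body.add_paragraph().text = line
    prs.save(output_path)
    return output_path


def main(raw: str) -> str:
    """JSON in, JSON out, matching the other scripts' stdin/stdout contract."""
    spec = json.loads(raw)
    problems = validate_deck(spec)
    if problems:
        return json.dumps({"status": "error", "errors": problems})
    return json.dumps({"status": "ok", "output": render(spec)})
```

As a script, it would end with `print(main(sys.stdin.read()))` under an `if __name__ == "__main__":` guard. Returning validation errors as JSON, rather than crashing, gives the agent something concrete to fix before retrying.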

3. Brand Guidelines Skill

This skill encodes your visual identity—colors, fonts, layout rules. Anthropic’s public Skills repo includes similar examples for brand guidelines and themes.

```text
brand-guidelines-skill/
  SKILL.md
  palette.json
  typography.md
```

SKILL.md:

```markdown
---
name: brand-guidelines-skill
description: Apply ACME Corp's brand guidelines (colors, typography, layout) to slides, documents, and charts. Use whenever creating a branded artifact.
---

# Brand Guidelines Skill

## Summary

This skill enforces ACME Corp's brand guidelines (colors, typography, layout rules) when creating artifacts.

## Color palette

- Primary: #0055FF (buttons, primary emphasis)
- Secondary: #00C896 (accents)
- Background: #FFFFFF
- Text: #111111

## Usage rules

- Use Primary for titles and key accents.
- Avoid more than 2 accent colors per slide.
- Maintain at least 4.5:1 contrast ratio for text.

## When used with slide-generation

- Apply Primary color to slide titles.
- Use Secondary for chart lines and highlights.
- Keep background white with dark text.
```
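The 4.5:1 rule is one the agent shouldn’t eyeball. Below is a minimal sketch of a check the Skill could ship as a script, using the WCAG 2.x relative-luminance and contrast-ratio formulas. The `PALETTE` dictionary mirrors the colors listed above; treating it as the content of palette.json is an assumption, since that file isn’t shown.

```python
# Hypothetical helper for brand-guidelines-skill; PALETTE mirrors the SKILL.md colors.
PALETTE = {
    "primary": "#0055FF",
    "secondary": "#00C896",
    "background": "#FFFFFF",
    "text": "#111111",
}


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color like '#0055FF'."""
    h = hex_color.lstrip("#")
    channels = []
    for i in (0, 2, 4):
        c = int(h[i:i + 2], 16) / 255
        # Undo the sRGB gamma curve before weighting the channels.
        channels.append(c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = channels
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def check_text_contrast(palette: dict[str, str], minimum: float = 4.5) -> dict[str, bool]:
    """Which palette colors are legible as text on the brand background?"""
    bg = palette["background"]
    return {
        name: contrast_ratio(color, bg) >= minimum
        for name, color in palette.items()
        if name != "background"
    }
```

With these values, Text (#111111) and Primary (#0055FF) pass on white, while Secondary (#00C896) comes out at roughly 2.2:1. That is consistent with the guideline reserving Secondary for chart lines and highlights rather than text.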

Now a single general-purpose agent, equipped with all three Skills, can:

- Use data-analysis-skill to understand the dataset and propose chart specs.

- Use brand-guidelines-skill to constrain color/typography choices.

- Use slide-generation-skill to build the final deck file.

All without you building a new “Data Analyst Agent” from scratch.
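To make the hand-off between the three Skills concrete, here is a hypothetical glue function. It assumes chart specs shaped like the generate_charts.py output, produces the slide-generation input format shown earlier, and applies two brand rules (Primary for titles, Secondary for chart lines). The `title_color` and `color` keys are assumptions, not part of any documented format.

```python
def compose_deck_spec(title: str, summary_bullets: list[str], charts: list[dict],
                      palette: dict[str, str]) -> dict:
    """Merge analysis output and brand rules into the slide-generation input format."""
    sections = [{"title": "Summary", "bullets": summary_bullets, "charts": []}]
    for chart in charts:
        sections.append({
            "title": chart.get("title", "Chart"),
            "bullets": [],
            # Brand rule: Secondary for chart lines and highlights.
            "charts": [{**chart, "color": palette["secondary"]}],
        })
    # Brand rule: Primary for slide titles (an extra key the deck builder would read).
    return {"title": title, "title_color": palette["primary"], "sections": sections}
```

Each Skill still owns its own rules; the glue only passes structured data between them, which is what lets the same general agent reuse all three in other combinations.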

Treating Skills Like Software

As the talk and follow-up commentary point out, serious Skills will take weeks or months to build and maintain—just like production software. That means you need to manage them accordingly:

- Versioning: Track Skill versions and change logs so you know which behaviors changed when.

- Testing & evaluation: Write tests that exercise a Skill against known tasks and compare outputs against expected results.

- Dependencies: Let Skills declare which other Skills, MCP servers, or environment packages they rely on, so behavior is predictable across environments.

A simple skill.json next to SKILL.md could carry some of this metadata:

```json
{
  "name": "data-analysis-skill",
  "version": "1.3.0",
  "description": "Analyze tabular data and propose charts.",
  "dependencies": {
    "skills": ["brand-guidelines-skill>=1.0.0"],
    "mcp": ["internal-analytics-api"],
    "python": ["pandas>=2.0.0"]
  },
  "tests": [
    "tests/test_small_dataset.yaml",
    "tests/test_missing_values.yaml"
  ]
}
```

The agent runtime can then:

- Ensure dependencies are available before using the Skill.

- Run regression tests after upgrading the Skill to a new version.

- Log which Skill versions were active for a given session for auditability.
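Here is a sketch of the first of these duties: checking declared dependencies before loading a Skill. It assumes the hypothetical skill.json format above, supports only `>=` constraints on plain dotted versions, and takes the available Skills and MCP servers as inputs rather than discovering them. Python packages are looked up with the standard library’s `importlib.metadata`.

```python
from __future__ import annotations

import re
from importlib import metadata


def _version_tuple(version: str) -> tuple[int, ...]:
    """'2.1.0rc1' -> (2, 1, 0): keep the leading digits of each dotted part."""
    parts = []
    for part in version.split("."):
        match = re.match(r"\d+", part)
        if not match:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def _at_least(installed: str | None, minimum: str | None) -> bool:
    if installed is None:
        return False
    if minimum is None:
        return True
    a, b = _version_tuple(installed), _version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so "2.0" compares equal to "2.0.0".
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def _split(requirement: str) -> tuple[str, str | None]:
    """'pandas>=2.0.0' -> ('pandas', '2.0.0'); only '>=' is supported."""
    name, sep, minimum = requirement.partition(">=")
    return name.strip(), (minimum.strip() if sep else None)


def missing_dependencies(manifest: dict, skills: dict[str, str],
                         mcp_servers: set[str]) -> list[str]:
    """Problems blocking this Skill; `skills` maps installed Skill names to versions."""
    deps = manifest.get("dependencies", {})
    problems = []
    for req in deps.get("skills", []):
        name, minimum = _split(req)
        if not _at_least(skills.get(name), minimum):
            problems.append(f"skill {req}")
    for server in deps.get("mcp", []):
        if server not in mcp_servers:
            problems.append(f"mcp {server}")
    for req in deps.get("python", []):
        name, minimum = _split(req)
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if not _at_least(installed, minimum):
            problems.append(f"python {req}")
    return problems
```

A runtime would refuse to activate the Skill, or tell the user what to install, whenever the returned list is non-empty; a production version would use a full version-specifier parser instead of `_split`.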

Enterprise Use Cases: Skills as Collective Memory

In the talk and related content, Anthropic notes that the most enthusiastic adopters so far are large enterprises. They use Skills to:

- Encode organizational best practices (how this company writes incident reports, designs dashboards, or drafts contracts).

- Capture the “weird ways” internal tools are used—custom fields, exceptions, workflows that never made it into official documentation.

- Deploy standardized developer workflows (code style, testing, deployment) to thousands of engineers via a DevProd team.

Over time, you can imagine a “collective company brain”: an evolving Skill library curated by both humans and agents. When a new hire joins, their agent already knows:

- How the team writes RFCs.

- How sprints are run.

- How incidents are triaged.

And as they and the agent refine these Skills, everyone benefits.

Letting Agents Write Their Own Skills

A key long-term bet behind Skills is continuous learning. Because Skills are just folders with instructions and code, they form a natural target for agents themselves to create and refine.

Anthropic even ships a “Skill creator” Skill that guides Claude to turn repeated behaviors into reusable Skills. The workflow might look like:

- You notice Claude repeatedly performing a similar multi-step task for you.

- You ask: “Turn this into a Skill I can reuse.”

- Claude drafts a SKILL.md, scaffolds the folder structure, and proposes scripts or templates.

- You review, tweak, and then add it to your organization’s Skill library.
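The scaffolding step is mechanical enough to script. Here is a hypothetical sketch, not the actual Skill creator, that creates the folder, a SKILL.md with name/description frontmatter and a numbered workflow, and empty scripts/ and tests/ directories. The name check mirrors the naming rule in Anthropic’s Skills documentation (lowercase letters, digits, and hyphens, at most 64 characters).

```python
import re
from pathlib import Path

NAME_RE = re.compile(r"[a-z0-9-]{1,64}")


def scaffold_skill(root: Path, name: str, description: str, steps: list[str]) -> Path:
    """Create <root>/<name>/ with a starter SKILL.md plus scripts/ and tests/ folders."""
    if not NAME_RE.fullmatch(name):
        raise ValueError("name must be 1-64 lowercase letters, digits, or hyphens")
    if not description.strip():
        raise ValueError("description must not be empty")
    skill_dir = root / name
    # exist_ok=False: refuse to overwrite an existing scaffold.
    (skill_dir / "scripts").mkdir(parents=True, exist_ok=False)
    (skill_dir / "tests").mkdir()
    workflow = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    (skill_dir / "SKILL.md").write_text(
        f"---\nname: {name}\ndescription: {description.strip()}\n---\n\n"
        f"# {name}\n\n## Workflow\n\n{workflow}\n",
        encoding="utf-8",
    )
    return skill_dir
```

The human review step stays essential: the scaffold only gives Claude’s draft a consistent shape to fill in.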

This gives you a feedback loop where:

- Day 1: The agent knows almost nothing about your org.

- Day 30: The agent has a rich, curated library of Skills distilled from how you actually work.

Practical Getting Started Tips

If you want to adopt this “build skills, not agents” mindset in your own stack, a pragmatic path is:

- Identify a repeatable workflowChoose something you and your team do weekly: generate release notes, triage support tickets, summarize sprint retros, etc.

- Write the human playbook firstIn plain language, write the steps a competent human would follow, including edge cases and failure modes. This becomes your initial skill.md.

- Add small helper scriptsIf any step is deterministic and annoying (sorting entries, normalizing dates, hitting an internal API), write tiny scripts that the agent can call instead of reasoning everything in-token.

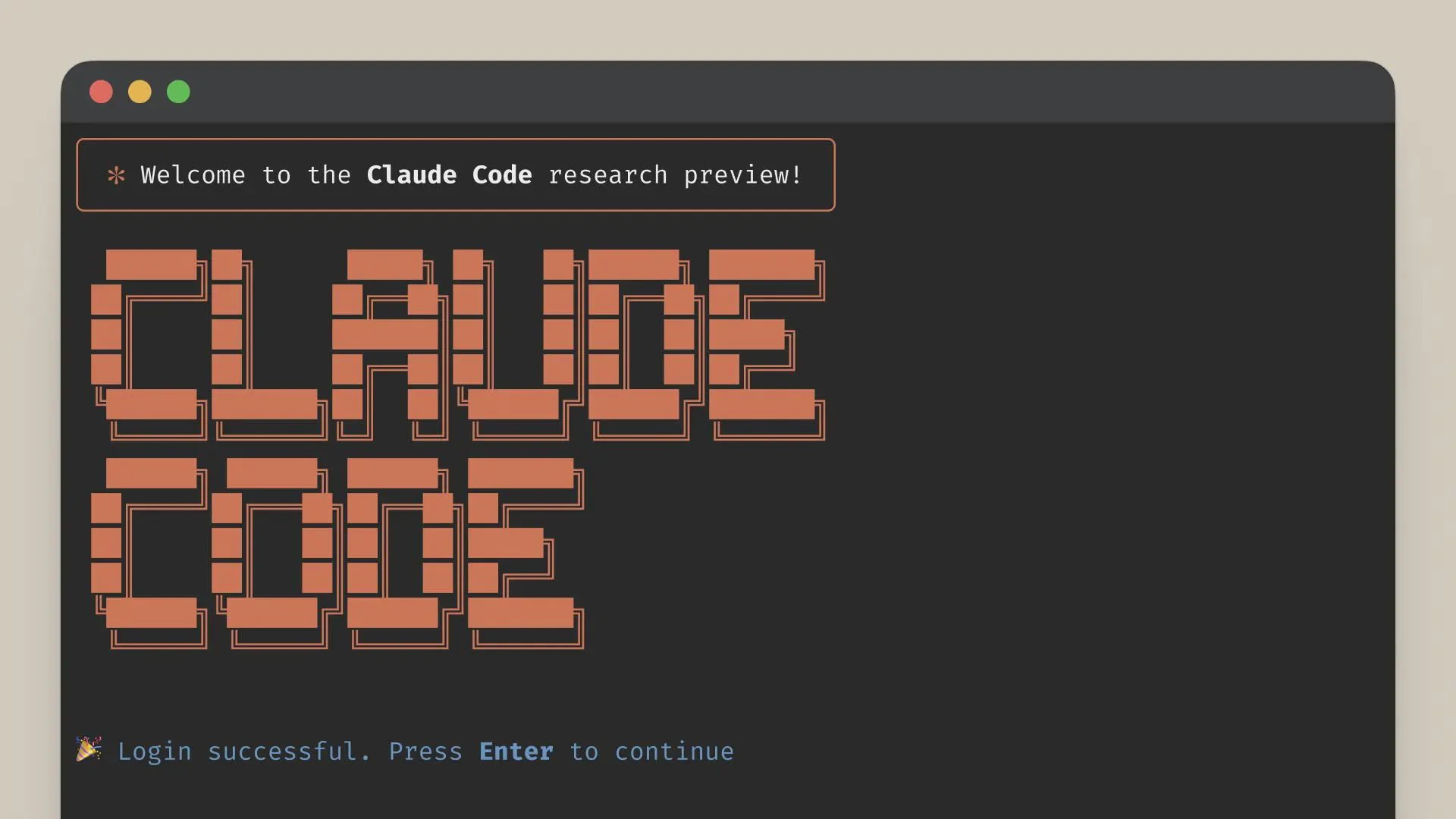

- Wire it into your agent/runtimeWhether you are using Claude Code, your own Node/TS stack, or a Python backend, expose the Skill folder to the agent’s filesystem and follow the docs for Skills usage via API.

- Iterate like codeCollect failed examples.Update the Skill instructions or scripts.Bump the version and (ideally) add a regression test.
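For the iterate-like-code loop, failed examples can simply become test cases. Below is a minimal sketch, assuming a deterministic helper script (here a hypothetical `normalize_date`, standing in for the date-normalizing chore mentioned above) and cases stored as plain input/expected pairs. The accepted input formats, including day-first `DD/MM/YYYY`, are assumptions.

```python
from datetime import datetime

# Formats this hypothetical helper accepts (day-first for slashes, by assumption).
INPUT_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d %b %Y")


def normalize_date(text: str) -> str:
    """Return the date as ISO YYYY-MM-DD, trying each known input format in turn."""
    for fmt in INPUT_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


# Each failed example from real use becomes a case; the list only grows.
CASES = [
    {"input": "2024-03-05", "expected": "2024-03-05"},
    {"input": "05/03/2024", "expected": "2024-03-05"},
    {"input": "March 5, 2024", "expected": "2024-03-05"},
    {"input": " 5 Mar 2024 ", "expected": "2024-03-05"},
]


def run_regression(fn, cases) -> list[str]:
    """Run every case; return a description of each failure (empty means pass)."""
    failures = []
    for case in cases:
        try:
            got = fn(case["input"])
        except Exception as exc:  # a crash counts as a failure, not an abort
            got = f"error: {exc}"
        if got != case["expected"]:
            failures.append(f"{case['input']!r}: expected {case['expected']!r}, got {got!r}")
    return failures
```

When a new failure shows up, add it to `CASES` first, fix the Skill, and bump the version only once `run_regression` returns an empty list.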

Over time, you will see a library of Skills emerge that encode how your organization works, while your agent implementation stays relatively generic.

Closing Thought: The OS Analogy

The talk ends with a helpful analogy:

- Models are like processors: powerful, but limited on their own.

- Agent runtimes are like operating systems: managing tokens, state, and resources.

- Skills are like applications and libraries: where domain expertise and real value live.

Only a few companies will build the “processors” and “OS.” But millions of teams can build Skills that encode their unique workflows and expertise. You do not need another bespoke agent; you need a growing, well-structured folder of Skills.

If you are building AI products today, the most leveraged move is to start that folder now.