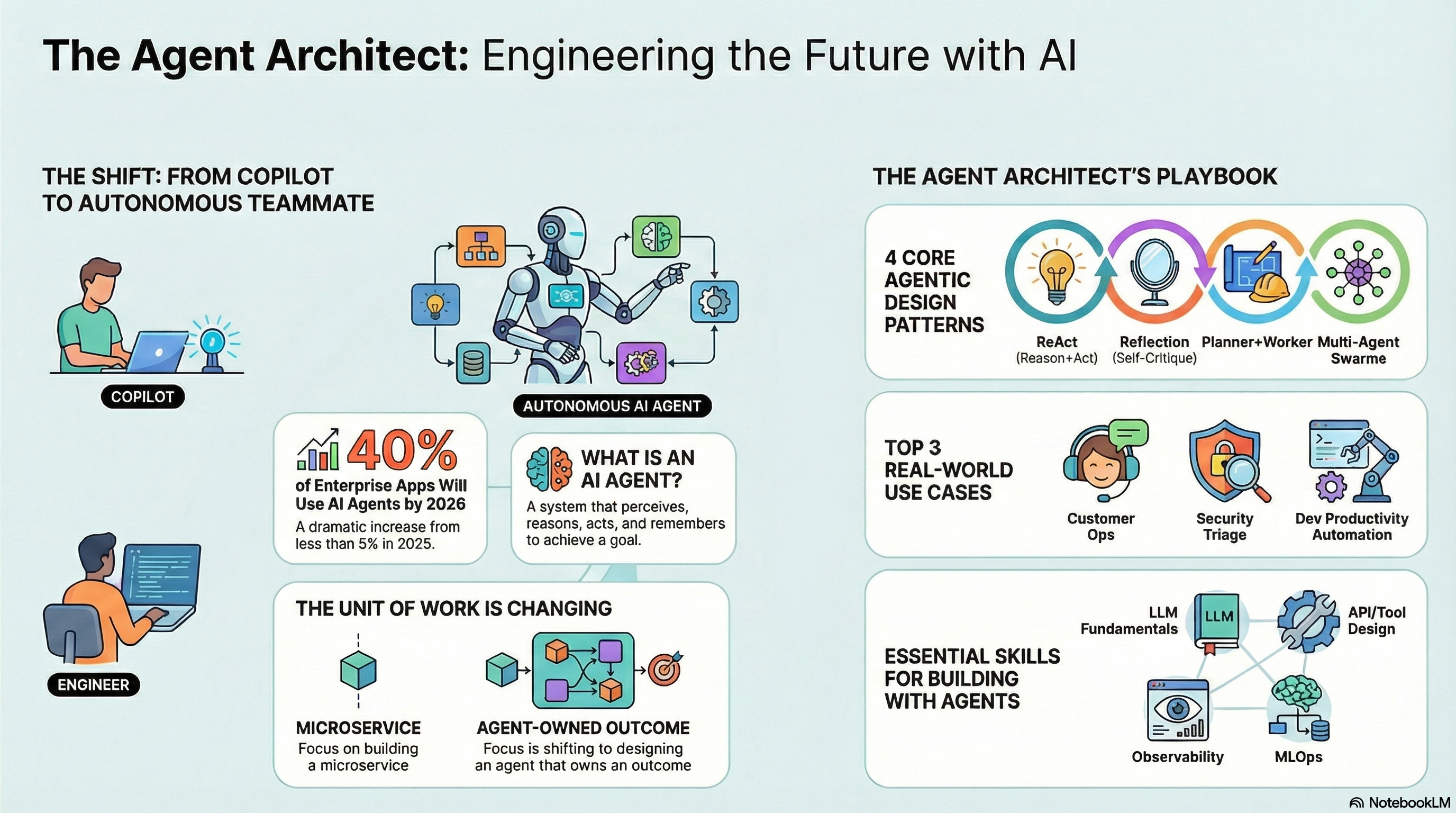

Why Agents Are the New Copilots

Search and trend reports for 2026 show AI moving from “autocomplete for code” to autonomous agents that operate across tools, APIs, and business workflows. Gartner, among others, expects a sharp jump: up to 40% of enterprise apps are predicted to embed AI agents by the end of 2026, up from under 5% in 2025.

This matters to engineers because:

- The unit of work is shifting from “function” or “microservice” to “agent that owns an outcome”.

- Teams are expected to design architectures where agents coordinate with services, humans, and other agents.

Agents are becoming a first-class design concern in the same way microservices and REST APIs were a decade ago.

What Exactly Is an AI Agent in 2026?

By 2026, “agent” usually means a system that can perceive, reason, act, and remember over time to pursue a goal, often with tools and APIs at its disposal. Unlike a simple chatbot, an agent has a loop: it observes the world, plans, calls tools, and updates its state until the goal is satisfied.
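
The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `plan` function stands in for an LLM call, and the `add_ten` / `add_one` tools, the `AgentState` class, and the numeric goal are all hypothetical names invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: int                                          # target the agent pursues
    value: int = 0                                     # the agent's view of the "world"
    history: list[str] = field(default_factory=list)   # remembered steps


def plan(state: AgentState) -> str:
    """Stand-in for the LLM: choose the next tool from the observed state."""
    remaining = state.goal - state.value
    return "add_ten" if remaining >= 10 else "add_one"


# Hypothetical tools; in a real system these would wrap APIs or services.
TOOLS: dict[str, Callable[[int], int]] = {
    "add_ten": lambda v: v + 10,
    "add_one": lambda v: v + 1,
}


def run_agent(goal: int, max_steps: int = 20) -> AgentState:
    state = AgentState(goal=goal)
    for _ in range(max_steps):            # hard step budget so the loop always ends
        if state.value == state.goal:     # observe: is the goal satisfied?
            break
        tool_name = plan(state)                       # plan
        state.value = TOOLS[tool_name](state.value)   # act
        state.history.append(tool_name)               # remember
    return state


result = run_agent(goal=23)
print(result.value, result.history)
```

The step budget is the part worth copying: a real planner can loop indefinitely, so the loop needs an exit condition that does not depend on the model.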

Common building blocks engineers keep searching for:

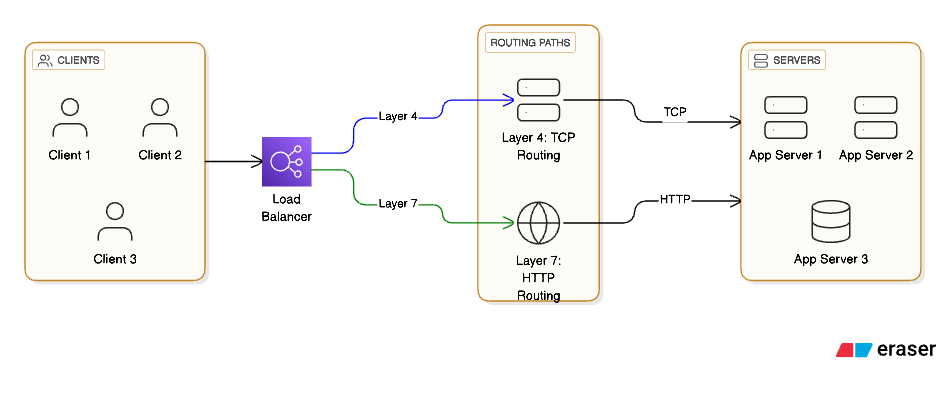

- Tool use: connecting LLMs to HTTP APIs, databases, vector stores, and internal services.

- Memory: short-term (recent steps) and long-term (user preferences, domain knowledge) via embeddings and stores.

- Planning: patterns like ReAct and explicit planners that break goals into smaller steps the agent can execute.

This is why agent work feels closer to system design than prompt design: you are orchestrating state, tools, and policies, not just crafting clever instructions.
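
To make the “orchestrating state” point concrete, here is a rough sketch of the two memory tiers listed above. It assumes a plain dictionary as the long-term store in place of the embeddings and vector stores a production system would use; `AgentMemory` and its method names are hypothetical.

```python
from collections import deque


class AgentMemory:
    """Short-term memory is a bounded window; long-term memory is a keyed store."""

    def __init__(self, window: int = 3) -> None:
        self.short_term: deque[str] = deque(maxlen=window)  # recent steps only
        self.long_term: dict[str, str] = {}                 # e.g. user preferences

    def record_step(self, step: str) -> None:
        self.short_term.append(step)  # the oldest step drops out when the window is full

    def remember(self, key: str, fact: str) -> None:
        self.long_term[key] = fact

    def build_context(self, keys: list[str]) -> str:
        """Assemble the text that would be placed in the model's next prompt."""
        facts = [f"{k}: {self.long_term[k]}" for k in keys if k in self.long_term]
        return "\n".join(["# facts", *facts, "# recent steps", *self.short_term])


memory = AgentMemory(window=2)
memory.remember("language", "prefers Python")
for step in ["searched repo", "ran tests", "opened PR"]:
    memory.record_step(step)

context = memory.build_context(["language", "timezone"])
print(context)
```

With a window of two, the first step (“searched repo”) is no longer in the context by the time the third step is recorded, which is the trade-off between context size and recall that the design has to manage.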

Agentic Design Patterns Developers Are Learning

The agentic AI space is converging around a handful of patterns that show up in blogs, talks, and search trends.

Core patterns engineers care about:

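- ReAct-style tool use: interleaving reasoning (“thoughts”) with tool calls to APIs, databases, and services. Toolformer is a related approach in which the model itself is trained to decide when to call an API.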

- Reflection: agents that critique and revise their own outputs, often via a secondary “critic” model.

- Planner + worker: a planning agent decomposes a task, worker agents execute steps, and a supervisor monitors quality.

- Multi-agent swarms: specialized agents (research, coding, ops, UX) collaborating on larger workflows, analogous to microservices.
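
As an illustration of the planner + worker pattern from the list above, the sketch below wires a planner, three workers, and a supervisor together. Everything here is a stand-in: the planner returns a fixed decomposition instead of calling a model, the workers are plain functions, and the `ops` worker is deliberately written to fail so the supervisor has something to catch.

```python
from typing import Callable

Worker = Callable[[str], str]

# Hypothetical specialized workers; each would normally wrap its own model and tools.
WORKERS: dict[str, Worker] = {
    "research": lambda step: f"notes for '{step}'",
    "coding": lambda step: f"patch for '{step}'",
    "ops": lambda step: "",  # simulates a worker that produces no output
}


def planner(goal: str) -> list[tuple[str, str]]:
    """Stand-in for a planning model: returns (worker name, step) pairs."""
    return [
        ("research", f"find code related to {goal}"),
        ("coding", f"implement {goal}"),
        ("ops", f"deploy {goal}"),
    ]


def supervisor(result: str) -> bool:
    """Minimal quality gate: reject empty results."""
    return bool(result.strip())


def run(goal: str) -> dict[str, list[str]]:
    report: dict[str, list[str]] = {"done": [], "escalated": []}
    for worker_name, step in planner(goal):
        result = WORKERS[worker_name](step)
        bucket = "done" if supervisor(result) else "escalated"
        report[bucket].append(step)
    return report


report = run("rate limiting")
print(report)
```

Keeping the supervisor separate from the workers means the quality check can change (for example, to a critic model as in the reflection pattern) without touching the planner or the workers.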

Engineers are trying to answer questions like:

- When does one smart agent beat a swarm of dumb ones?

- How do you debug a failing multi-agent workflow?

- Where should you persist state vs. keep it in context?

The answers depend on latency budgets, cost constraints, and the criticality of the workflow, which pushes this firmly into architecture territory.

Real Use Cases: From CRUD to Agent Workflows

Reports from cloud vendors and enterprises show agents stepping into specific, high-value workflows rather than “replacing developers.”

Examples that keep appearing:

- Customer ops agents: triaging tickets, drafting replies, calling internal APIs to fetch account data, and escalating edge cases to humans.

- Security agents: automating alert triage, log correlation, and first-pass investigation so human analysts can focus on threat hunting.

- Dev productivity agents: orchestrating repo search, test execution, infra changes, and release notes generation for a change request.

In all of these, traditional services still matter: REST/GraphQL APIs, event buses, and databases are the stable substrate that agents stand on. The “agentic” part is the orchestration and decision loop layered on top.

Skills Engineers Are Searching for (and Should Learn)

Current skills reports for 2026 put AI, generative AI, cloud-native, DevOps, and cybersecurity at the top of the list of in-demand tech skills. When filtered through the agent lens, a practical upskilling path emerges.

Core skills for agent-native systems:

- LLM and prompt engineering fundamentals: understanding token limits, context windows, system vs. user prompts, and evaluation.

- Tooling and APIs: designing safe, idempotent, well-scoped tools for agents to call; versioning them like any external API.

- Observability and guardrails: logging thoughts, actions, and tool calls; adding policy checks; and setting up human-in-the-loop review for sensitive actions.

- MLOps / model lifecycle: tracking model versions, prompts, and datasets; monitoring drift and failure modes in production.
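
The observability and guardrails item can be sketched as a single wrapper that every tool call passes through. This is an assumption-laden example rather than a recommended design: `guarded_call`, the `SENSITIVE_TOOLS` set, the refund and lookup tools, and the reviewer rule (approve refunds up to 50) are all hypothetical, and the human reviewer is simulated by a function.

```python
import json
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.audit")

# Hypothetical policy: tools listed here need a human decision before they run.
SENSITIVE_TOOLS = {"issue_refund"}


def guarded_call(
    tool_name: str,
    tool: Callable[..., Any],
    args: dict[str, Any],
    approve: Callable[[str, dict[str, Any]], bool],
) -> dict[str, Any]:
    """Log the call, apply the policy check, and only then execute the tool."""
    log.info("tool_call %s", json.dumps({"tool": tool_name, "args": args}))
    if tool_name in SENSITIVE_TOOLS and not approve(tool_name, args):
        log.warning("tool_blocked %s", tool_name)
        return {"status": "blocked", "result": None}
    result = tool(**args)
    log.info("tool_result %s", json.dumps({"tool": tool_name, "result": result}))
    return {"status": "ok", "result": result}


def issue_refund(order_id: str, amount: float) -> str:
    return f"refunded {amount:.2f} on {order_id}"


def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


def reviewer(tool_name: str, args: dict[str, Any]) -> bool:
    """Stand-in for human review: approve refunds up to 50, reject larger ones."""
    return args.get("amount", 0) <= 50


small = guarded_call("issue_refund", issue_refund, {"order_id": "A1", "amount": 20.0}, reviewer)
large = guarded_call("issue_refund", issue_refund, {"order_id": "A2", "amount": 900.0}, reviewer)
lookup = guarded_call("lookup_order", lookup_order, {"order_id": "A1"}, reviewer)
print(small, large, lookup)
```

Routing every call through one function gives a single place to attach tracing, policy changes, and review queues later.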

Soft skills matter too: multiple reports highlight that as AI automates routine coding, engineers who can frame problems, design systems, and communicate trade-offs will be more valuable than those who only know a framework.

Mini Case Study: Turning a Backend Dev into an “Agent Architect”

Picture a mid-level backend engineer in 2024–2025 whose stack is “Python + FastAPI + Postgres + React.” That engineer’s 2026 search history now includes:

- “How to design agent workflows for internal tools”

- “Multi-agent orchestration patterns ReAct planner worker”

- “Observability for AI agents production”

In a typical company, that shift plays out in three steps:

- The engineer exposes existing domain logic as explicit tools (HTTP endpoints with strict schemas and clear side effects).

- An orchestration layer (Node, Python, or a platform product) wraps an LLM with those tools and a planning strategy.

- They add tracing, logging of thought/action/state, and a review pipeline for high-risk tasks (e.g., finance, security).
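
The first step, exposing domain logic as a tool with a strict schema and clear side effects, might look like the sketch below. It uses only the standard library so it runs as-is; in the stack described above the same contract would more likely sit behind a FastAPI endpoint. The `credit_account` tool, its fields, and the in-memory `Ledger` are hypothetical, and the idempotency key is one assumed way to make agent retries safe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreditAccountRequest:
    """Strict input schema for a hypothetical 'credit_account' tool."""
    account_id: str
    amount_cents: int
    idempotency_key: str

    def __post_init__(self) -> None:
        if not isinstance(self.account_id, str) or not self.account_id:
            raise ValueError("account_id must be a non-empty string")
        if type(self.amount_cents) is not int or self.amount_cents <= 0:
            raise ValueError("amount_cents must be a positive integer")
        if not isinstance(self.idempotency_key, str) or not self.idempotency_key:
            raise ValueError("idempotency_key must be a non-empty string")


def parse_request(payload: dict) -> CreditAccountRequest:
    """Reject unknown or missing fields instead of silently ignoring them."""
    expected = {"account_id", "amount_cents", "idempotency_key"}
    if set(payload) != expected:
        raise ValueError(f"expected exactly {sorted(expected)}, got {sorted(payload)}")
    return CreditAccountRequest(**payload)


class Ledger:
    """In-memory stand-in for existing domain logic and its database."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self._seen: dict[str, int] = {}  # idempotency_key -> balance already returned

    def credit_account(self, payload: dict) -> int:
        req = parse_request(payload)
        if req.idempotency_key in self._seen:  # retry: no second side effect
            return self._seen[req.idempotency_key]
        new_balance = self.balances.get(req.account_id, 0) + req.amount_cents
        self.balances[req.account_id] = new_balance
        self._seen[req.idempotency_key] = new_balance
        return new_balance


ledger = Ledger()
call = {"account_id": "acct-1", "amount_cents": 500, "idempotency_key": "k1"}
first = ledger.credit_account(call)
retry = ledger.credit_account(call)  # the agent retried the same call
print(first, retry, ledger.balances)
```

Because the retry carries the same idempotency key, the account is credited once even though the tool was called twice, which matters when a planner re-issues a step after a timeout.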

The title on the business card might still be “Senior Software Engineer,” but the actual role looks a lot like Agent Architect: designing contracts between agents and systems, not just functions and services.

Conclusion: Stop Optimizing for “Language Dev,” Start Optimizing for “Agentic Systems”

Job market discussions and skills reports for 2026 are blunt: fewer teams are hiring “React devs” or “Python devs” in isolation; they are hiring people who can solve problems with AI-rich systems. AI agents are becoming the orchestrators of those systems, embedding themselves into enterprise workflows, developer tooling, and even security operations.

For software engineers, this is the trend worth paying attention to, and researching in depth, right now: learn how to design, debug, and ship agentic architectures, and your skills will stay relevant beyond the next framework cycle.